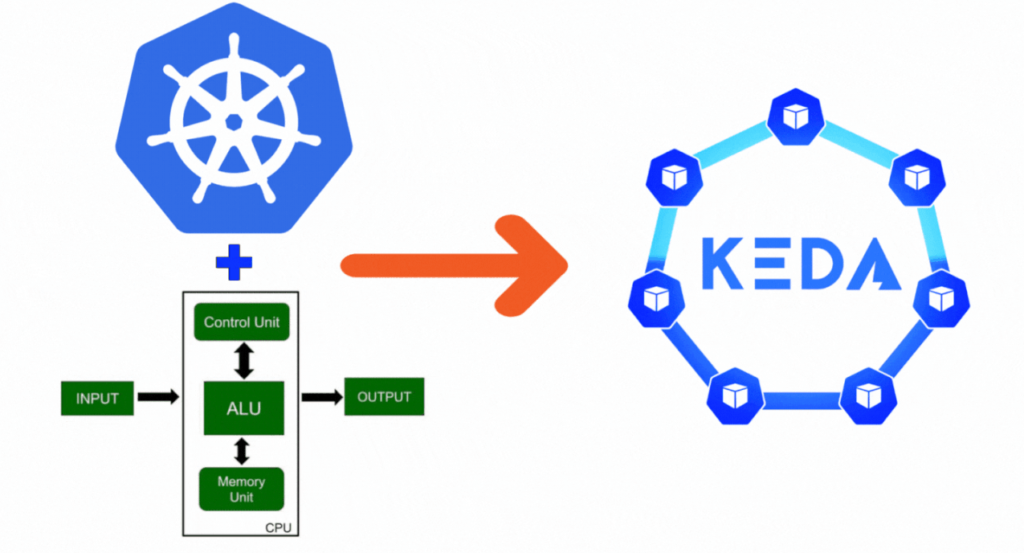

As contemporary applications grow, so does the need for more reliable, adaptable, and effective cloud management solutions. Kubernetes, one of the leading container orchestration platforms, has revolutionised the way businesses scale, deploy, and maintain their applications. However, as applications grow in complexity and demand, traditional scaling techniques sometimes need to be rethought to optimise resource efficiency and reduce costs. This is where more sophisticated scaling methods, such as those provided by KEDA, become useful. KEDA (Kubernetes Event-Driven Autoscaling) lets Kubernetes respond in real time to specific events or demand signals, a kind of dynamic reaction that traditional autoscaling lacks.


Understanding Kubernetes Scaling and the Role of KEDA

Kubernetes is a very powerful platform for automating the deployment, scaling, and management of containerised applications. Scaling is usually handled by the Horizontal Pod Autoscaler (HPA), which adjusts the number of pod replicas according to CPU or memory usage. That works well for many applications, but not for all of them, particularly those that need finer control based on events or external factors. The main drawback of this approach is that, out of the box, Kubernetes offers no built-in way to scale applications in response to events such as HTTP request load, database events, or message queue length.

This is where KEDA enters the picture. With KEDA, Kubernetes can scale up and down quickly and accurately without overprovisioning, which is especially important for applications whose workloads change erratically. For example, an online store during a flash sale or a social network receiving heavy traffic can benefit greatly, because KEDA adjusts resources automatically as needed. Developers specify scaling criteria in KEDA using events from many sources, such as Prometheus, Azure Monitor, RabbitMQ, and others. This makes KEDA a crucial component of the Kubernetes toolkit, particularly for businesses that need an efficient way to match resources to demand.
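To make the contrast concrete, here is a minimal Python sketch of the HPA's core replica calculation as described in the Kubernetes documentation, desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), including the default 0.1 tolerance. The function name and all numbers are illustrative assumptions, and the sketch ignores pod readiness and min/max bounds. It shows how a CPU target can tell a queue-processing workload to shrink while its backlog is growing. KEDA does not replace the HPA; it supplies it with event-based metrics such as queue length and handles scaling to and from zero itself.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_value: float,
                         target_value: float, tolerance: float = 0.1) -> int:
    """Sketch of the HPA core formula (simplified: no readiness or min/max handling)."""
    ratio = current_value / target_value
    # The HPA skips scaling when the ratio is already close enough to 1.0.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# Hypothetical scenario: two workers, 500 queued messages,
# but each pod spends most of its time waiting on I/O.
cpu_based = hpa_desired_replicas(2, current_value=30, target_value=60)     # CPU %: 30 vs 60
queue_based = hpa_desired_replicas(2, current_value=250, target_value=50)  # messages per pod
print(cpu_based, queue_based)  # 1 10
```

With CPU as the signal, the HPA would halve the worker pool; with queue length per pod (500 messages / 2 pods = 250, against an assumed target of 50), it would grow the pool to 10 replicas.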

Using Custom Metrics to Scale with Accuracy

Custom metrics are arguably one of KEDA’s greatest advantages. Whereas the standard Kubernetes HPA typically works with CPU and memory utilisation, KEDA lets developers define their own scaling criteria. This opens up many opportunities to scale applications in ways that directly reflect current technical or business requirements. Consider, for example, an application that processes real-time stock market data. Rather than relying on generic CPU or memory utilisation, KEDA can scale it according to the rate at which data arrives. When the flow of market data increases, KEDA can add processing pods so the application does not slow down; when less data arrives, it can remove them so the application does not use more resources than necessary. Businesses that are sensitive to both response times and costs benefit from this kind of fine-grained scaling.
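For the market-data example, a minimal sketch of rate-based scaling might look like the following. It assumes the custom metric is "incoming events per second" and that each pod can comfortably handle a fixed number of them; `RateScalingRule`, `replicas_for_rate`, and the numbers are hypothetical. With an average-value style target (KEDA’s default metric type for triggers, as we understand it), the HPA effectively computes ceil(total metric / per-pod target), which is what the sketch reproduces before clamping to minimum and maximum replica counts.

```python
import math
from dataclasses import dataclass


@dataclass
class RateScalingRule:
    """Hypothetical rule: scale on incoming events per second rather than CPU."""
    target_per_pod: float   # events/s one pod is assumed to handle comfortably
    min_replicas: int = 1
    max_replicas: int = 100


def replicas_for_rate(rule: RateScalingRule, events_per_second: float) -> int:
    # With an average-value target, the per-pod average is total / replicas,
    # so the replica count that meets the target is ceil(total / target).
    wanted = math.ceil(events_per_second / rule.target_per_pod)
    # Keep the result inside the configured bounds.
    return max(rule.min_replicas, min(rule.max_replicas, wanted))


rule = RateScalingRule(target_per_pod=1000, min_replicas=1, max_replicas=20)
for rate in (200, 4500, 30000):
    print(rate, replicas_for_rate(rule, rate))
# 200 1
# 4500 5
# 30000 20
```

The upper bound matters as much as the formula: during an extreme surge (30,000 events/s here) the rule caps the deployment at 20 pods instead of asking for 30.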

Optimizing Costs with Idle Scaling and Burst Handling

KEDA performs exceptionally well when applications must handle highly variable loads and costs need to be kept down. Other general-purpose autoscaling models are often less effective at cost optimisation because they keep a fixed amount of resources allocated even when it is not needed. KEDA, in contrast, supports idle scaling: an application can scale up to the required level in an instant and scale down to zero replicas during idle periods. Workloads that run only occasionally, such as task schedulers, batch processors, and infrequently used API services, benefit most from this. Because resources are consumed only while the application is active, that is, only when they are actually needed, costs can drop substantially. KEDA’s ability to scale out quickly during bursts and unexpected spikes in demand is a further benefit for businesses with erratic usage patterns.
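The following is a simplified Python model of idle scaling and burst handling, not KEDA’s actual implementation. In KEDA, the 0 to 1 transition is handled by KEDA itself, driven by ScaledObject settings such as `pollingInterval` (30 seconds by default) and `cooldownPeriod` (300 seconds by default), while 1 to N is left to the HPA. The model collapses those two steps into one. `IdleScaler`, `activation_threshold`, and the other names are hypothetical, and while the load is idle but the cooldown has not yet expired, the model simply holds the current replica count.

```python
import math
from typing import Optional


class IdleScaler:
    """Simplified model of event-driven scale-to-zero (not KEDA's actual code)."""

    def __init__(self, target_per_pod: float, activation_threshold: float = 0.0,
                 cooldown_seconds: float = 300.0, max_replicas: int = 100):
        self.target_per_pod = target_per_pod              # load one pod should carry
        self.activation_threshold = activation_threshold  # at or below this = idle
        self.cooldown_seconds = cooldown_seconds          # idle time before scaling to zero
        self.max_replicas = max_replicas
        self.replicas = 0
        self.last_active: Optional[float] = None

    def observe(self, now: float, metric: float) -> int:
        if metric > self.activation_threshold:
            self.last_active = now
            # Active: wake from zero if needed and size to the load in one step (burst).
            wanted = max(1, math.ceil(metric / self.target_per_pod))
            self.replicas = min(self.max_replicas, wanted)
        elif (self.replicas > 0 and self.last_active is not None
              and now - self.last_active >= self.cooldown_seconds):
            # Idle for the whole cooldown period: release every pod.
            self.replicas = 0
        # Otherwise (idle but still cooling down, or already at zero): hold.
        return self.replicas


# Hypothetical trace of (seconds, queued items): idle, burst, quiet, cooldown ends, trickle.
scaler = IdleScaler(target_per_pod=50, cooldown_seconds=300)
trace = [(0, 0), (30, 400), (60, 0), (330, 0), (360, 10)]
print([scaler.observe(t, m) for t, m in trace])  # [0, 8, 8, 0, 1]
```

The trace shows both behaviours described above: a burst of 400 items takes the workload from zero to eight pods in one step, and once the load has been idle for the full 300-second cooldown, the pods are released entirely until new work arrives.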

Real-World Use of KEDA

The practical applications of KEDA show how widely applicable the approach is across domains. In logistics and transportation, KEDA is used to handle demand fluctuations driven by the number of packages to be processed or the number of tracking queries. Streaming platforms in the media and entertainment industry use KEDA to scale resources up or down based on the number of current viewers, keeping streams smooth during periods of high traffic and avoiding needless resource allocation during quiet periods. IoT (Internet of Things) systems are another interesting application, where data generated by millions of devices needs to be analysed right away. KEDA can scale IoT applications based on the volume and rate of data entering the system, improving response times and reducing latency. For IoT systems, KEDA thus provides efficient processing pipelines that adapt to data-driven events.

Conclusion

KEDA further enhances the scalability of Kubernetes. By applying advanced, event-driven scaling techniques, it allows organisations to scale resources intelligently in response to events as they occur. With configurable metrics, idle scaling, and burst handling, KEDA lets applications scale swiftly, affordably, and in line with customer demand. By extending the native Kubernetes approach with event-driven scaling, KEDA allows developers to focus on building high-performance applications that satisfy their users.